Manoranjan Paul received the BSc Engineering (Honors) degree in Computer Science and Engineering from Bangladesh University of Engineering and Technology (BUET) and the PhD degree from Monash University, Australia. He has also completed a Graduate Certificate in University Leadership and a Graduate Certificate in Learning & Teaching in Higher Education at Charles Sturt University (CSU). Currently, Manoranjan Paul is a Professor in Computer Science, Director of the Computer Vision Lab, and Head of the Machine Vision and Digital Health (MAVIDH) Research Group at CSU. Prof Paul is the recipient of the ICT Researcher of the Year 2017 award and a Golden Disruptor Award from the Australian Computer Society (ACS).

He is currently an Associate Editor of the international journals IEEE Transactions on Multimedia (JCR Q1 and Rank 1 in Multimedia), IEEE Transactions on Circuits and Systems for Video Technology (JCR Q1 and Rank 2 in Multimedia), and EURASIP Journal on Advances in Signal Processing (JCR Q2). Previously, Prof Paul was an Assistant Professor at Ahsanullah University, a Lecturer at Monash University, and a Post-Doctoral Research Fellow at the University of New South Wales (UNSW), Monash University, and Nanyang Technological University (NTU), Singapore. He was an Associate Director of the Centre for Research in Complex Systems (2013-17).

His major research interests are in the fields:

Prof Paul has published around 200 fully refereed international publications, including 70+ journal papers. He has successfully supervised 16 PhD students to completion, including 6 as principal supervisor. He has obtained $3.6 million in competitive external grant funding, including Australian Research Council (ARC) Discovery Projects (DP19 & DP13), Australia-China, Soil CRC, NSW Government, Wine Australia, and NSW DPI grants. Prof Paul was an invited keynote speaker at the international conferences IEEE DICTA’17 & ’13, CSWN’17, IEEE WoWMoM’14, and IEEE ICCIT’10.
He has organized international conferences: PSIVT’19 as General Chair, DICTA’18 as Program Chair, PSIVT’17 as Program Chair, and DICTA’16 as Publicity Chair. He is a Senior Member of the Institute of Electrical and Electronics Engineers (IEEE) and the Australian Computer Society (ACS). For detailed information, please visit his personal website: http://csusap.csu.edu.au/~rpaul/.

Researchers: Prof Manoranjan Paul (CSU), Dr. Michael Antolovich (CSU), and Dr. Zavid Parvez (ISI Foundation, Italy, and Prof Paul’s former PhD student at CSU)

An electroencephalogram (EEG) is a non-invasive graphical record of ongoing electrical activity, captured from the scalp, that is produced by the firing of neurons in the human brain in response to internal and/or external stimuli. It has great potential for mental disorders and brain diseases, from diagnosis to treatment. We are focusing on detecting and predicting epileptic seizure periods by analysing EEG signals, and we are looking for other applications of EEG signals.

Outcome: We are able to predict epileptic seizures 15 minutes in advance with 90% accuracy when we use invasive EEG signals. I successfully supervised a PhD project on this topic. We have published 14 papers in this area, including two journal papers in IEEE Transactions on Biomedical Engineering and IEEE Transactions on Neural Systems and Rehabilitation Engineering.

Researchers: Prof Manoranjan Paul (CSU), Professor Manzur Murshed (FedUni), A/Professor Weisi Lin (NTU), Dr. Mortuza Ali (FedUni), Dr. Tanmoy Debnath (CSU), Dr. Subrata Chakraborty (USQ), Shampa Shahriyar (PhD student, Monash University), Pallab Podder (PhD student, CSU), and Niras C.V. (PhD student, Macquarie University)

Video conferencing, video telephony, tele-teaching, telemedicine, and monitoring systems are some of the video coding/compression applications that have attracted considerable interest in recent years, now that 3D video has become a reality.
The burgeoning Internet has increased the need for transmitting (non-real-time) and/or streaming (real-time) video over a wide variety of transmission channels connecting devices of varying storage and processing capacity. Stored movies or animations can now be downloaded, and many reality-type interactive applications are also available via webcams. To cater for devices with different storage and transmission bandwidth requirements, raw digital video data need to be coded at different bit rates with different time complexity. Sometimes, for realistic views of the scene of action, we also need to encode/compress/process videos captured by multiple cameras (multi-view) from different angles. We are focusing on video coding and compression at different scales, suitable for different devices, with acceptable quality and computational time.

Outcome: ARC Discovery Projects DP13 & DP19, which partially solve the interactivity problem for 3D video calls. Our contributions have been adopted in the latest video coding standards, e.g., HEVC, VP9, and AVS. We have published 4 IEEE Transactions papers and are supervising three PhD projects on this topic.

Researchers: Prof Manoranjan Paul (CSU), Professor Manzur Murshed (FedUni), A/Professor Weisi Lin (NTU), and Dr. Subrata Chakraborty (USQ)

Object detection against a complex and dynamically changing background is still a challenging task in computer vision. Object detection techniques based on adaptive background modeling are widely used in machine vision applications to handle the challenges of real-world multimodal backgrounds. However, they are constrained to specific environments because they rely on environment-specific parameters, and their performance also fluctuates across different operating speeds. Camera motion makes dynamic background modeling even more complicated. We are focusing on dynamic background modeling in challenging environments and its applications in different fields.
Outcome: We are able to extract the background in challenging environments. We have successfully applied background modeling to video coding and video summarization. We have published 2 IEEE Transactions papers and supervised one PhD project to completion.

Researchers: Prof Manoranjan Paul (CSU), Dr. Tanmoy Debnath (CSU), and Dr Pallab Podder

An eye tracker is a device that captures eye movements and their duration. We have an eye tracker in my lab. We are focusing on various research issues, e.g., abnormal event detection and prediction, speech/alcohol/drug impairment detection, retail/advertising effectiveness, video communication for deaf people, using the eye as an input device, improving electronic document design, increasing user acceptability of learning technologies, telemedicine, and remote surgery.

Outcome: We are able to determine human engagement using the eye tracker. We have found some facts about marker discrepancy in multiple-marker scenarios. We are also able to assess the quality of a video when no reference is available. We have published three papers in this area.

Researchers: Prof Manoranjan Paul (CSU), Professor Junbin Gao (University of Sydney), Professor Terry Bossomaier (CSU), and Dr Rui Xiao (CSU)

A hyperspectral imaging sensor divides images into many spectral bands, which can extend beyond the visible range. Hyperspectral imaging has applications in the military (to detect chemical weapons), geological surveys, agriculture (to quantify crops), mineralogy (to identify different minerals), physics (to identify different properties of materials), surveillance (to detect different objects and reflections), measurement of surface CO2 emissions, mapping of hydrological formations, tracking of pollution levels, and more. We are investigating different applications of hyperspectral imaging, for example, fruit life-span prediction.
Outcome: We are able to model a spectral predictor and have developed a context-adaptive entropy coding technique that gives compression ratios of up to 7 on some images, whereas current state-of-the-art methods only achieve around 3. We have published 4 papers in this area, including a PLoS ONE 2016 journal paper.

Researchers: Prof Manoranjan Paul (CSU) and Dr MD Salehin

In our daily lives, we capture video for various purposes such as security, entertainment, monitoring, and investigation. Storing it requires huge memory space, and retrieving or replaying important information manually from such a high volume of video takes enormous time. In this project, we explore various features based on scene content, scene transitions, and the human visual system to extract the information that is important for a particular application and make a shorter version of the video.

Outcome: We are able to summarize different types of video, including surveillance, sports, and movies. We have published 10 papers in this area, including in PLOS ONE 2017 and JOSA A 2017.

Researchers: Prof Manoranjan Paul (CSU) and Chris Williams (Honors student, CSU)

Machine vision is now extensively used for defect detection in the manufacturing of collagen-based products such as sausage skins, a multimillion-dollar industry worldwide. At present, the industry standard is a LabVIEW software environment, in which a graphical interface is used to manage and detect any defects in the collagen skins. Available data show that this method produces false positives, where creases or folds, which are by-products of the inspection process, are classified as defects in the product. This directly reduces overall system performance and wastes resources. Hence, novel criteria were added to enhance the current defect detection techniques.
The proposed improvements aim to achieve higher accuracy in detecting both true and false positives by using a function that probes for colour deviation and fluctuation in the collagen skins. From an operational point of view, this method is more flexible and more accurate than the original graphical LabVIEW program, and it could be incorporated into any programming environment. After the method was implemented at a well-known Australian company, experimental results demonstrated an average 26% increase in the ability to detect false positives, with a corresponding substantial reduction in operating cost.

In recent years, free viewpoint video (FVV) or free viewpoint television (FVT) technology has been gaining popularity for rendering intermediate views from existing adjacent views, which avoids transmitting large volumes of video data. Depth Image Based Rendering (DIBR) techniques are normally used to generate an intermediate view on a remote display; however, the rendered view sometimes has missing pixels (holes) due to inaccurate depth, low-precision rounding errors, and occlusion. To address this issue, we are working on hole filling, view rendering, depth coding, and 3D video formation.

Outcome: I can reconstruct a better virtual view than existing methods. I have published 12 papers in this area, including in IEEE Transactions on Image Processing (Rank 1 in Signal Processing, CORE A*).

Outcome: Our unsupervised vessel extraction method provides results comparable to popular supervised methods. I have published 5 papers in this area, including journal papers in Signal, Image and Video Processing 2017 and Pattern Analysis and Applications 2017.

Falling towards camera

Fall Detection: some challenging scenarios where the existing method fails, because it classifies sweeping the floor or picking up an object as a fall, whereas the proposed method succeeds.
AR on Oral and Maxillofacial Surgeries and AR for Visualising the Blood Vessels in Breast Implant Surgeries

Successful, timely diagnosis of neuropsychiatric diseases is key to their management. Research on the diagnosis of Alzheimer’s disease has used various computer-aided multi-class diagnosis approaches with varying degrees of success. However, there is still a need for more efficient and reliable approaches to diagnosing the disease. We are conducting research on diagnosing Alzheimer’s disease so that the problem can be detected earlier.

Outcome: We use a k-sparse autoencoder within a deep learning framework for this purpose, and the results are promising. I am supervising a Professional Doctorate project on it and have published 1 paper in this area.

The aim of this project is to develop a non-contact cardiopulmonary detection method for natural disaster rescue and relief, using frequencies and antennas similar to those of current smartphones. The current focus is on the 2.4 GHz and 5 GHz Wi-Fi radio frequencies, Doppler radar, signal processing, and a noise-cleansing technique that detects and measures vital signs. This approach could be used as an alternative to the commercially designed devices currently used for heartbeat and respiration detection during rescue relief, with the aim of reducing device transit time and cost. The project further evaluates a new type of multi-directional antenna that could detect human physiological pulsation from a 360° view.

Outcome: I am supervising a DIT project on it.

For computer vision algorithms to perform accurately, the visibility of video frames is very important. Visibility can be degraded by several kinds of atmospheric interference in challenging weather, one of which is rain streaks.
In recent years, rain streak removal has attracted a lot of research interest because of exciting applications such as autonomous cars, intelligent traffic monitoring systems, and multimedia. We extract rain features, such as temporal appearance and rain shape, to filter rain out of the video.

Outcome: Prof Manoranjan Paul is supervising a PhD project on it.

Due to the availability of powerful image-editing software and the growing amount of multimedia data transmitted via the Internet, integrity verification and confidentiality of data are becoming important issues. However, the detection accuracy and recovery capability of existing methods for tampered images are still not at the required level for the wide range of possible tampering. We use a new blind and fragile watermarking method to detect tampering and better recover tampered images.

Outcome: Prof Manoranjan Paul is supervising a PhD project on it.

Parkinson’s disease (PD) is a progressive neurodegenerative movement disease affecting over 1% of people by the age of 60 and is the second most common neurodegenerative disease in the elderly, with an estimated 6 million sufferers worldwide (as at 2017). The loss of dopamine-producing neurons in PD results in a range of both motor (that is, relating to movement) and non-motor symptoms, and currently a patient can have the disease for 5 to 10 years before it is diagnosed, by which time the majority of the neurons in the affected part of the brain may already have been lost. The project investigates whether this disease could be detected through changes in the characteristics of a person’s typing.

Outcome: Prof Manoranjan Paul is supervising a DIT project on it.

Session Chair/PC member:

Professor Manoranjan Paul

Teaching Responsibilities

Grants

Research Focus

Project 1: EEG Signal Analysis

Project 2: Video Coding and Compression
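Much of the encoder-side complexity mentioned in the video coding project above typically comes from motion estimation. As a generic illustration (not the group's own algorithm), the sketch below implements textbook full-search block matching with the sum of absolute differences (SAD) as the matching cost. The function names, block size `n`, and search range `r` are illustrative assumptions.

```python
from typing import List, Tuple

Frame = List[List[int]]  # luma samples, indexed as frame[y][x]


def sad(cur: Frame, ref: Frame, bx: int, by: int, dx: int, dy: int, n: int) -> int:
    """Sum of absolute differences between the n x n block of `cur` at (bx, by)
    and the block of `ref` displaced by the candidate vector (dx, dy)."""
    return sum(
        abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
        for y in range(n)
        for x in range(n)
    )


def full_search(cur: Frame, ref: Frame, bx: int, by: int, n: int, r: int) -> Tuple[int, int]:
    """Test every displacement within +/- r pixels; return the lowest-SAD vector."""
    h, w = len(ref), len(ref[0])
    best_mv, best_cost = (0, 0), None
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            # Skip candidates whose displaced block leaves the reference frame.
            if bx + dx < 0 or by + dy < 0 or bx + dx + n > w or by + dy + n > h:
                continue
            cost = sad(cur, ref, bx, by, dx, dy, n)
            if best_cost is None or cost < best_cost:
                best_mv, best_cost = (dx, dy), cost
    return best_mv


# Usage: a 2x2 bright square moves one pixel to the right between frames.
ref = [[0] * 8 for _ in range(8)]
cur = [[0] * 8 for _ in range(8)]
for y in (2, 3):
    for x in (2, 3):
        ref[y][x] = 255
        cur[y][x + 1] = 255
print(full_search(cur, ref, bx=3, by=2, n=2, r=2))  # (-1, 0)
```

Full search is exhaustive and therefore expensive; its cost grows with the square of the search range, which is why practical encoders use faster search patterns to trade accuracy for time.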

Project 3: Object Detection and Background Modeling
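The adaptive background modeling described above can be illustrated with the simplest possible model, a per-pixel running average. This is a single-mode sketch, far simpler than the multimodal models (such as Gaussian mixtures) used for real-world scenes, and it is not the group's method. The class name, the learning rate `alpha`, and the `threshold` are illustrative assumptions; they are exactly the kind of environment-specific parameters the text identifies as a weakness.

```python
from typing import List

Frame = List[List[float]]  # grayscale intensities, indexed as frame[y][x]


class RunningAverageBackground:
    """Single-mode adaptive background model: each pixel's background estimate
    is an exponential moving average of its observed intensities."""

    def __init__(self, first_frame: Frame, alpha: float = 0.05, threshold: float = 30.0):
        self.alpha = alpha          # learning rate: how fast the model adapts
        self.threshold = threshold  # min |pixel - background| to flag foreground
        self.bg = [row[:] for row in first_frame]

    def apply(self, frame: Frame) -> List[List[bool]]:
        """Return a foreground mask for `frame`, then update the model."""
        mask = []
        for y, row in enumerate(frame):
            mask_row = []
            for x, value in enumerate(row):
                is_fg = abs(value - self.bg[y][x]) > self.threshold
                mask_row.append(is_fg)
                # Adapt only where the pixel looks like background, so moving
                # objects are not absorbed into the model too quickly.
                if not is_fg:
                    self.bg[y][x] = (1 - self.alpha) * self.bg[y][x] + self.alpha * value
            mask.append(mask_row)
        return mask


# Usage: a static 4x4 scene at intensity 100; an object appears at one pixel.
model = RunningAverageBackground([[100.0] * 4 for _ in range(4)])
frame = [[100.0] * 4 for _ in range(4)]
frame[1][1] = 200.0
mask = model.apply(frame)
print(sum(map(sum, mask)), mask[1][1])  # 1 True
```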


Project 4: Eye tracking Technology

Project 5: Hyperspectral Imaging
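The spectral predictor mentioned in the hyperspectral outcome above exploits the strong correlation between adjacent bands. The sketch below assumes the simplest such predictor, a global least-squares fit of each band from the previous one (hypothetical, not the group's predictor), and leaves out the context-adaptive entropy coder that would encode the residuals. It also makes the compression ratio figures concrete: a ratio of 7 means the coded data is one seventh the size of the raw data.

```python
from typing import List, Tuple

Band = List[float]  # one spectral band, flattened to a list of pixel values


def fit_interband_predictor(prev: Band, cur: Band) -> Tuple[float, float]:
    """Least-squares fit of cur ~= a * prev + b over all pixels."""
    n = len(prev)
    mean_p = sum(prev) / n
    mean_c = sum(cur) / n
    cov = sum((p - mean_p) * (c - mean_c) for p, c in zip(prev, cur))
    var = sum((p - mean_p) ** 2 for p in prev)
    a = cov / var if var else 0.0
    b = mean_c - a * mean_p
    return a, b


def prediction_residuals(prev: Band, cur: Band) -> List[float]:
    """Residuals an entropy coder would encode instead of the raw samples."""
    a, b = fit_interband_predictor(prev, cur)
    return [c - (a * p + b) for p, c in zip(prev, cur)]


def compression_ratio(original_bits: float, compressed_bits: float) -> float:
    """Raw size divided by coded size (7.0 means seven times smaller)."""
    return original_bits / compressed_bits


# Usage: a band that is exactly 2 * previous band + 5 leaves zero residuals.
prev_band = [10.0, 20.0, 30.0, 40.0]
cur_band = [25.0, 45.0, 65.0, 85.0]
print(fit_interband_predictor(prev_band, cur_band))  # (2.0, 5.0)
print(prediction_residuals(prev_band, cur_band))     # [0.0, 0.0, 0.0, 0.0]
print(compression_ratio(16, 4))                      # 4.0 (16 -> 4 bits/sample)
```

Small, tightly clustered residuals are what allow an entropy coder to spend far fewer bits per sample than the raw data.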

Project 6: Video Summarising
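One simple way to use scene transitions for summarising, as described above, is to keep a frame only when its content changes markedly from the last kept frame. The sketch below assumes grayscale frames and uses the distance between intensity histograms as the change measure; the bin count, the `threshold`, and the function names are illustrative, and the group's own features (scene content, transitions, human visual system cues) are richer than this.

```python
from typing import List

Frame = List[int]  # grayscale frame flattened to intensities in 0..255


def histogram(frame: Frame, bins: int = 16) -> List[float]:
    """Normalized intensity histogram of a frame."""
    counts = [0] * bins
    for v in frame:
        counts[v * bins // 256] += 1
    return [c / len(frame) for c in counts]


def select_keyframes(frames: List[Frame], threshold: float = 0.5) -> List[int]:
    """Keep frame 0, then every frame whose histogram differs from the last
    kept keyframe by more than `threshold` (L1 distance, range 0..2)."""
    keyframes = [0]
    last = histogram(frames[0])
    for i in range(1, len(frames)):
        h = histogram(frames[i])
        if sum(abs(a - b) for a, b in zip(h, last)) > threshold:
            keyframes.append(i)
            last = h
    return keyframes


# Usage: two scene changes (dark -> bright -> dark) yield three keyframes.
dark = [10] * 100
bright = [240] * 100
print(select_keyframes([dark, dark, bright, bright, dark]))  # [0, 2, 4]
```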

Project 7: Collagen Defect Detection

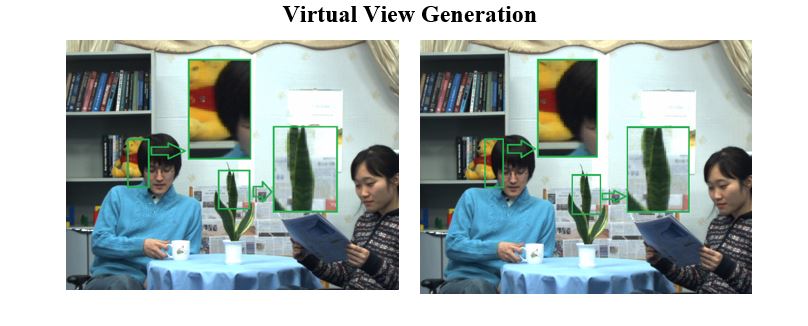
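The collagen inspection method above is described only as a function that probes colour deviation and fluctuation. The sketch below is a hypothetical reading of that idea, not the deployed method: it measures each inspection window's mean colour distance from an expected skin colour (deviation) and its brightness standard deviation (fluctuation), and treats strongly fluctuating windows as likely creases or folds rather than defects. The rule, the reference colour, and both thresholds are assumptions made for illustration.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]


def window_stats(pixels: List[RGB], reference: RGB) -> Tuple[float, float]:
    """Return (deviation, fluctuation) for one inspection window.

    deviation:   mean Euclidean distance of each pixel's colour from `reference`
    fluctuation: standard deviation of pixel brightness within the window
    """
    dev = sum(math.dist(p, reference) for p in pixels) / len(pixels)
    brightness = [sum(p) / 3 for p in pixels]
    mean_b = sum(brightness) / len(brightness)
    fluct = math.sqrt(sum((b - mean_b) ** 2 for b in brightness) / len(brightness))
    return dev, fluct


def is_defect(pixels: List[RGB], reference: RGB,
              max_dev: float = 40.0, crease_fluct: float = 25.0) -> bool:
    """Hypothetical rule: flag a window whose colour departs from the reference,
    unless it also shows strong light/dark fluctuation (treated here as a
    likely crease or fold, i.e. a false positive to suppress)."""
    dev, fluct = window_stats(pixels, reference)
    return dev > max_dev and fluct < crease_fluct


# Usage with made-up colours (illustrative only).
reference = (200.0, 150.0, 120.0)                            # expected skin colour
stain = [(100.0, 60.0, 40.0)] * 16                           # uniform colour shift
crease = [(250.0, 200.0, 170.0), (150.0, 100.0, 70.0)] * 8   # light/dark banding
clean = [reference] * 16
print(is_defect(stain, reference), is_defect(crease, reference), is_defect(clean, reference))
# True False False
```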

Project 8: View Synthesis for 3D video and Free Viewpoint Video
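The origin of the DIBR holes described above can be shown on a single image row. This sketch assumes integer disparities already derived from depth and a virtual camera shifted horizontally; it forward-warps pixels with a z-buffer test and then fills each hole from the neighbouring pixel that lies farther away. Background-side filling is a common simple baseline, not the group's hole-filling technique, and the function names are illustrative.

```python
from typing import List, Optional, Tuple

Row = List[Optional[int]]  # warped colours; None marks a hole


def warp_row(colors: List[int], disparity: List[int]) -> Tuple[Row, List[int]]:
    """Forward-warp one row to a virtual view whose camera is shifted right.

    Each pixel moves left by its disparity (nearer pixels have larger
    disparity). If two pixels land on the same target, the nearer one wins
    (z-buffer test). Targets that nothing lands on stay None: holes."""
    w = len(colors)
    out: Row = [None] * w
    zbuf = [-1] * w  # disparity of the pixel currently stored at each target
    for x in range(w):
        tx = x - disparity[x]
        if 0 <= tx < w and disparity[x] > zbuf[tx]:
            out[tx] = colors[x]
            zbuf[tx] = disparity[x]
    return out, zbuf


def fill_holes(row: Row, zbuf: List[int]) -> Row:
    """Fill each hole from the nearest valid neighbour (left or right) with the
    smaller disparity, because disocclusions usually expose background."""
    w = len(row)
    filled = list(row)
    for x in range(w):
        if row[x] is not None:
            continue
        left = next((i for i in range(x - 1, -1, -1) if row[i] is not None), None)
        right = next((i for i in range(x + 1, w) if row[i] is not None), None)
        candidates = [i for i in (left, right) if i is not None]
        if candidates:
            filled[x] = row[min(candidates, key=lambda i: zbuf[i])]
    return filled


# Usage: a foreground object (9, disparity 2) in front of background (1).
colors = [1, 1, 1, 9, 9, 1, 1, 1]
disparity = [0, 0, 0, 2, 2, 0, 0, 0]
warped, zbuf = warp_row(colors, disparity)
print(warped)                    # [1, 9, 9, None, None, 1, 1, 1]
print(fill_holes(warped, zbuf))  # [1, 9, 9, 1, 1, 1, 1, 1]
```

Filling from the foreground side instead would smear the object into the disoccluded region, which is one reason naive interpolation produces visible artefacts.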

Project 9: Medical Imaging

Retinal Vessel Extraction: analysis of output images: (a) ground truth (right eye image), (b) output image (right eye image), (c) ground truth (left eye image), and (d) output image (left eye image).

Falling from a chair

Falling sideways

Picking up object

Sitting on sofa

Sweeping floor

Upper front teeth image registration

Lower front when patient moves

Image overlaying if patient moves

If surgical instrument moves

When surgical instrument moves

Mandibular reconstruction

If surgical instrument moves

Image overlaying if patient moves

Project 10: Early Diagnosis of Alzheimer’s Disease
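The k-sparse autoencoder mentioned in the Alzheimer’s outcome above enforces sparsity by keeping only the k largest hidden activations for each input. The sketch below shows that forward pass in a common formulation (linear encoder, top-k selection, tied-weight decoder). Training, which backpropagates only through the selected units, is omitted, and the weights and function names are illustrative rather than the project's model.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows = hidden units, columns = input features


def top_k(h: Vector, k: int) -> Vector:
    """k-sparse step: keep the k largest activations, set the rest to zero."""
    keep = set(sorted(range(len(h)), key=lambda i: h[i], reverse=True)[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(h)]


def encode(W: Matrix, b: Vector, x: Vector, k: int) -> Vector:
    """Linear encoder z = W x + b followed by the top-k sparsity constraint."""
    z = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
    return top_k(z, k)


def decode(W: Matrix, c: Vector, h: Vector) -> Vector:
    """Tied-weight decoder x_hat = W^T h + c."""
    return [sum(W[j][i] * h[j] for j in range(len(h))) + c[i] for i in range(len(c))]


# Usage: 3 hidden units, 2 inputs, only the strongest unit survives (k = 1).
W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
h = encode(W, [0.0, 0.0, 0.0], [2.0, 5.0], k=1)
print(h)                         # [0.0, 0.0, 7.0]
print(decode(W, [0.0, 0.0], h))  # [7.0, 7.0]
```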

Project 11: Cardiopulmonary measurement using the smartphone
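In continuous-wave Doppler radar sensing, chest movement modulates the phase of the reflected signal, so respiration and heartbeat appear as peaks in different frequency bands of the demodulated displacement signal. The sketch below assumes that displacement signal is already available and simply locates the strongest DFT peak in an assumed respiration band (0.1 to 0.5 Hz) and an assumed heartbeat band (0.8 to 2.0 Hz). It is a textbook baseline, not the project's noise-cleansing technique, and `dominant_frequency` is a hypothetical helper.

```python
import math
from typing import List


def dominant_frequency(signal: List[float], fs: float, f_lo: float, f_hi: float,
                       step: float = 0.05) -> float:
    """Frequency (Hz) in [f_lo, f_hi] where the signal's DFT magnitude peaks."""
    mean = sum(signal) / len(signal)
    x = [s - mean for s in signal]  # remove DC so it cannot dominate
    best_f, best_mag = f_lo, -1.0
    for i in range(round((f_hi - f_lo) / step) + 1):
        f = f_lo + i * step
        re = sum(v * math.cos(2 * math.pi * f * n / fs) for n, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * f * n / fs) for n, v in enumerate(x))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_f, best_mag = f, mag
    return best_f


# Usage: synthetic 32 s displacement signal sampled at 20 Hz, with breathing at
# 0.25 Hz (15 breaths/min) and a weaker heartbeat at 1.25 Hz (75 beats/min).
fs = 20.0
sig = [math.sin(2 * math.pi * 0.25 * n / fs) + 0.1 * math.sin(2 * math.pi * 1.25 * n / fs)
       for n in range(int(32 * fs))]
resp_hz = dominant_frequency(sig, fs, 0.1, 0.5)   # respiration band (assumed)
heart_hz = dominant_frequency(sig, fs, 0.8, 2.0)  # heartbeat band (assumed)
print(round(resp_hz * 60), round(heart_hz * 60))  # 15 75
```

The achievable frequency resolution is limited by the record length (about 1/T Hz for a T-second window), so a finer `step` does not by itself give more precise rates.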

Project 12: Rain removal from video

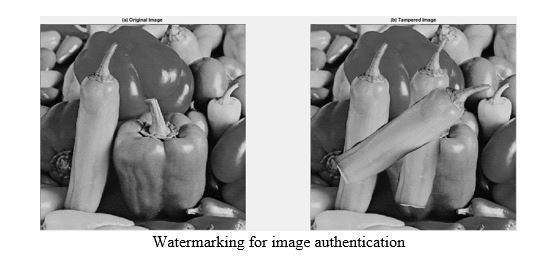
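Rain streaks are transient: a given pixel is usually covered by a streak for only a frame or two, which is what makes temporal appearance a useful rain feature. A classic baseline that exploits this is a per-pixel temporal median, sketched below under the assumption of a static camera and scene (moving objects would be blurred). It is not the group's method, which also uses features such as rain shape; `temporal_median` and `radius` are illustrative names.

```python
from statistics import median
from typing import List

Frame = List[float]  # grayscale frame flattened row by row


def temporal_median(frames: List[Frame], center: int, radius: int = 2) -> Frame:
    """Estimate a rain-free version of frames[center] as the per-pixel median
    over a window of up to 2*radius + 1 frames (clipped at sequence ends)."""
    lo = max(0, center - radius)
    hi = min(len(frames), center + radius + 1)
    window = frames[lo:hi]
    return [median(f[i] for f in window) for i in range(len(frames[center]))]


# Usage: a bright streak hits pixel 1 in frame 2 only and is removed.
frames = [[50, 50, 50, 50] for _ in range(5)]
frames[2][1] = 255
print(temporal_median(frames, center=2))  # [50, 50, 50, 50]
```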

Project 13: Watermarking for image authentication
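A fragile watermark is designed to break under any modification, and a blind scheme can be verified without the original image. The sketch below is a generic block-wise example of both properties, not the group's new method: each block's least significant bits carry a truncated SHA-256 hash of its remaining bit planes and its position. It only localises tampering and offers no recovery; a practical scheme would also use a keyed hash (e.g., HMAC) so an attacker cannot recompute the marks. The helper names are illustrative.

```python
import hashlib
from typing import List

Block = List[int]  # 8-bit pixel values of one image block (at most 256 pixels)


def _block_bits(block: Block, index: int) -> List[int]:
    """Authentication bits for a block: SHA-256 of its 7 most significant bit
    planes plus its position, truncated to one bit per pixel."""
    msb = bytes(p & 0xFE for p in block)
    digest = hashlib.sha256(index.to_bytes(4, "big") + msb).digest()
    bits = [(byte >> (7 - k)) & 1 for byte in digest for k in range(8)]
    return bits[: len(block)]


def embed(blocks: List[Block]) -> List[Block]:
    """Write each block's authentication bits into its least significant bits."""
    out = []
    for i, block in enumerate(blocks):
        bits = _block_bits(block, i)
        out.append([(p & 0xFE) | b for p, b in zip(block, bits)])
    return out


def tampered_blocks(blocks: List[Block]) -> List[int]:
    """Blind check: indices of blocks whose LSBs no longer match their content."""
    return [i for i, block in enumerate(blocks)
            if [p & 1 for p in block] != _block_bits(block, i)]


# Usage: four 16-pixel blocks; altering one pixel in block 1 is localised.
blocks = [[(17 * i + 3 * j) % 256 for j in range(16)] for i in range(4)]
marked = embed(blocks)
print(tampered_blocks(marked))  # []
marked[1][3] ^= 1
print(tampered_blocks(marked))  # [1]
```

Because the hash covers the upper bit planes, changes to those bits also alter the expected marks, so they are detected with very high probability rather than with certainty.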

Project 14: Parkinson’s disease detection using keystroke characteristics
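Typing characteristics such as those mentioned in the Parkinson’s project above are commonly quantified with keystroke-dynamics timings: hold (dwell) time and flight time. The sketch below computes both from hypothetical press/release timestamps in milliseconds; which features the project actually uses is not stated, so treat `timing_features` and its outputs as illustrative.

```python
from typing import Dict, List, Tuple

# (key, press_time_ms, release_time_ms) for each keystroke, in typing order
Keystroke = Tuple[str, float, float]


def timing_features(strokes: List[Keystroke]) -> Dict[str, float]:
    """Mean hold (dwell) time and mean flight time between consecutive keys.

    dwell  = release - press of the same key
    flight = press of next key - release of previous key (negative when
             keys overlap)"""
    dwell = [release - press for _, press, release in strokes]
    flight = [strokes[i + 1][1] - strokes[i][2] for i in range(len(strokes) - 1)]
    return {
        "mean_dwell_ms": sum(dwell) / len(dwell),
        "mean_flight_ms": sum(flight) / len(flight) if flight else 0.0,
    }


# Usage with made-up timestamps.
strokes = [("h", 0.0, 100.0), ("i", 150.0, 230.0), ("!", 300.0, 420.0)]
print(timing_features(strokes))  # {'mean_dwell_ms': 100.0, 'mean_flight_ms': 60.0}
```

Tracking how such timings drift over weeks or months, rather than their absolute values, is what a screening approach of this kind would rely on.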

Professional Activities

ARC (Australian Research Council) Assessor since 2013

Recently Graduated / Current PhD Students

Journal Editor

Keynote Speaker

International Journal Reviewer

Membership

Seminar presentations at various international conferences, such as